When using Chinese for searching, it is not always possible to find the desired results.

Sometimes it works fine, while other times it doesn’t work. Here’s an example:

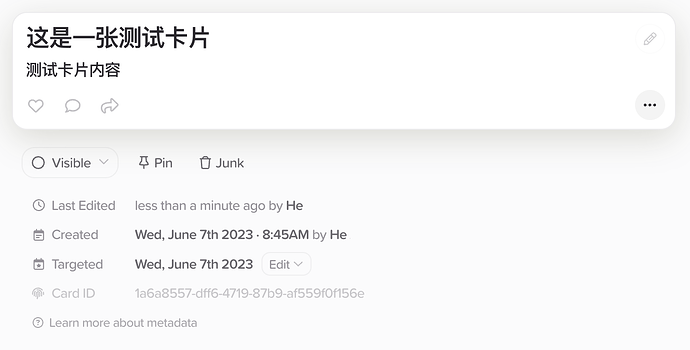

Card title: 这是一张测试卡片

这是 ![]()

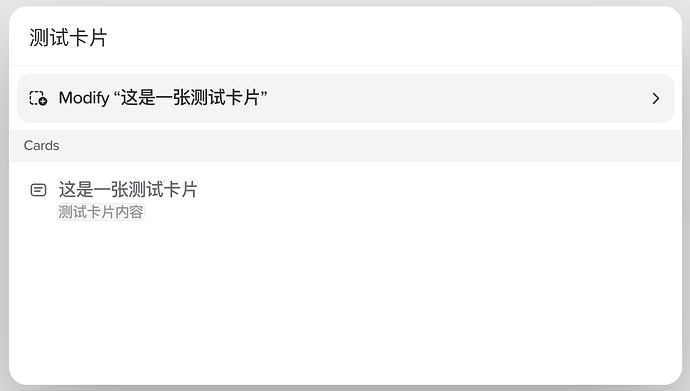

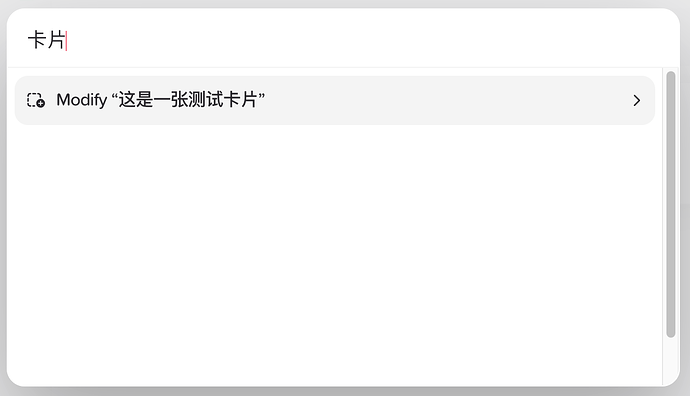

一张 ![]()

这是一 ![]()

测试卡片 ![]()

这是一张 ![]()

一张测试卡 ![]()

是一张测试卡 ![]()

一张测试卡片 ![]()

Hi @hahahumble, thanks for the in-depth bug report and screenshots, that’s very helpful – we will look into this!

Investigating this a bit now and would like to follow up on your examples @hahahumble. Is your desire that your example text (“this is a test card”) be matched against all the example queries?

Segmenting the Chinese into English equivalent words I think would result in 这是, 一张, 测试, 卡片 – right? So would you actually want query 3 (这是一) to match against the given card name? And is fuzziness required? I’m not entirely sure what will be possible but would like to understand the desired outcomes as much as possible. I haven’t taken any Chinese since Middle School so mine is a bit rusty.

Yes, your understanding is correct. I want my example text to match all sample queries.

However, there is no need for word segmentation in Chinese, as long as consecutive Chinese characters match.

Yes.

Fuzzy search is not required, but it needs to be able to match multiple places after adding Spaces between Chinese characters.

I have written a small piece of code that may help you:

let data = ['北京天安门', '上海外滩', '广州珠江', '深圳福田', '天安门广场'];

let searchTerm = '门 天安';

// Split search terms into arrays based on spaces

let searchTerms = searchTerm.split(' ');

let results = data.filter(item => {

// Check if every search term is included in the item

return searchTerms.every(term => item.includes(term));

});

console.log(results); // Output: ['北京天安门', '天安门广场']

Nice example – super helpful! Unfortunately most search terms are not going to contain spaces, right? So we can’t rely on that for parsing. That is why I’m thinking a segmentation by semantic word (rather than just character) is probably necessary.

The search system introduced in SN 2.4 works based on tokenization rather than inclusive string matching, both for performance (an index can be maintained) and because in general it is helpful if the order of words does not need to match. e.g. in English you would want the search term “performance notes” to match a card called “notes on performance”.

To do the same in Chinese (or any logogram-using language), we could just split the string into characters, e.g. 这是一张测试卡片 becomes 这 + 是 + 一 + 张 + 测 + 试 + 卡 + 片, but then all actual meaning of words is lost. This is fine to find all matches that contain some characters, but it means that the search system will give worse relevance for Chinese text. To try to put this into a concrete example, if you had two cards with titles of (sorry in advance, I know this example is nonsense):

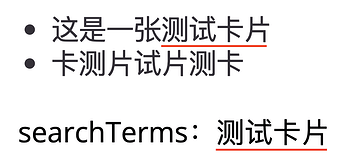

- 这是一张测试卡片

- 卡测片试片测卡

And I then search for 测试卡片, the second card would be ranked above the first card because it has more occurrences of the individual characters within it and we tokenized on individual characters, even though the first card is clearly more semantically relevant.

Yes this is a very contrived/nonsense example, but I think issues like this would be much more common when you consider an entire card in Chinese and not just the title. Does that sound about right? Or do you think the relevance issue is still not important?

Here, only the first result will appear because “测试卡片” is not included in the second result:

In most cases, Chinese searches based on string matching is sufficient, but considering the card content, the search may be slower, and using word tokenization can be a better choice.

Word tokenization in Chinese is quite challenging, and if the quality of tokenization is poor, the search results will be unsatisfactory. Here are a few Chinese word tokenization tools that have relatively good performance, which you can consider:

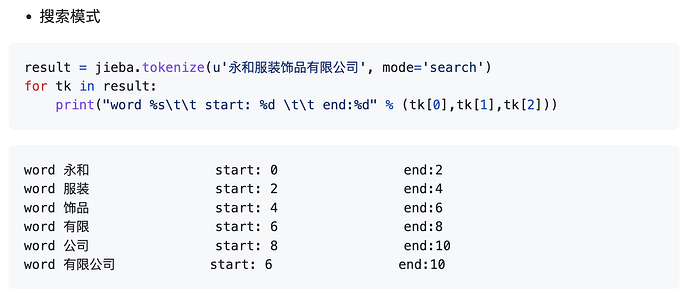

These types of tools usually come with search modes that further tokenize long words, which can increasing accuracy. You can consider use this to build the index.

Yep, I was looking at jieba. Unfortunately it seems like many of the well-maintained libraries are for languages like Python, Go, or Rust, when for Supernotes it realistically needs to run in a browser environment (JS) since search is fully offline now. There are a number of cludges (like compiling the Rust lib to WASM) and ports (jieba-js) that people seem to have tried. I will dig further and see which give good results with good performance characteristics.

I would also like to solve this for Japanese / Korean / etc while I’m at it, but it seems like the segmenters aren’t generic enough to just swap out dictionaries.

As you say, string inclusion works well-enough, but the architecture of the new search doesn’t really allow for that, though it might be possible to switch from a tokenized search to inclusive search if terms are detected as Chinese. I think that path is unlikely to work though, as it would probably break search for mixed content (e.g. Chinese and English text in one card) or incur a very high performance cost.

Good news! Found a solution for this that works well and even works on mixed content.

Unfortunately it won’t work in Firefox, but it will work just fine in all the apps and Chrome / Safari.

Will be shipping it in 2.4.3 next week.